Prashanth Thattai

R&D Scientist in Human-AI co-creativity specializing in assistive generative AI technologies and UX tools that provide people with greater ability to customize AI for their creative tasks.

My work cuts across the interdisciplinary domains of AI, Software Engineering, Human-Computer Interaction, and Art (specifically music). I deploy interactive AI-based software within the context of real-time co-creation (e.g., music duets) with people to study their experiences of partnership and trust as they fit AI systems into their practice.

I have a strong academic track record of publishing in Computer Science and Music venues such as ICCC, NIME, and Creativity and Cognition, and I actively write independent grants to scale up research into industry prototypes.

Experiences

Professional Experience

Course Leader

Current

Goldsmiths, University of London

Research focus on generative audio technologies for AI presence in VR, gaming, and EdTech.

Course Leader for MOOCs, DSP, and computer graphics

Researcher & Associate Lecturer

Jan - June 2023

University of the Arts, London

Developed text-based strategies for generating audio with AI technology, in collaboration with a team of researchers, UI developers, and software engineers

POSTDOC

Feb - Nov 2022

DE MONTFORT UNIVERSITY, UK

Leveraged software design expertise to code audio pipelines that translate a composer’s intent into user experience in interactive environments

GRANT LEAD

2021 - 2022

ARTS X TECH LAB, NATIONAL ARTS COUNCIL, SG

Created a music algorithm to convert Max/MSP data into melodic harmony while optimizing for real-time performance

RESEARCH ENGINEER

2020 - 2022

NATIONAL UNIVERSITY OF SINGAPORE, SG

Designed and developed Python-based software tools to automatically generate annotated audio datasets for a community of audio researchers

Education

PHD

2015 - 2020

NATIONAL UNIVERSITY OF SINGAPORE, SG

6 publications focused on user experience design, AI architecture design, and architectural trade-offs in real-time music systems

MA

2012 - 2015

NATIONAL UNIVERSITY OF SINGAPORE, SG

Dissertation on algorithm design for computational creativity in music rhythm generation

B.ENGG, COMPUTER SCIENCE & ENGINEERING

2008 - 2012

NATIONAL INSTITUTE OF TECHNOLOGY, TRICHY, INDIA

Music annotation using Digital Signal Processing algorithms

Papers

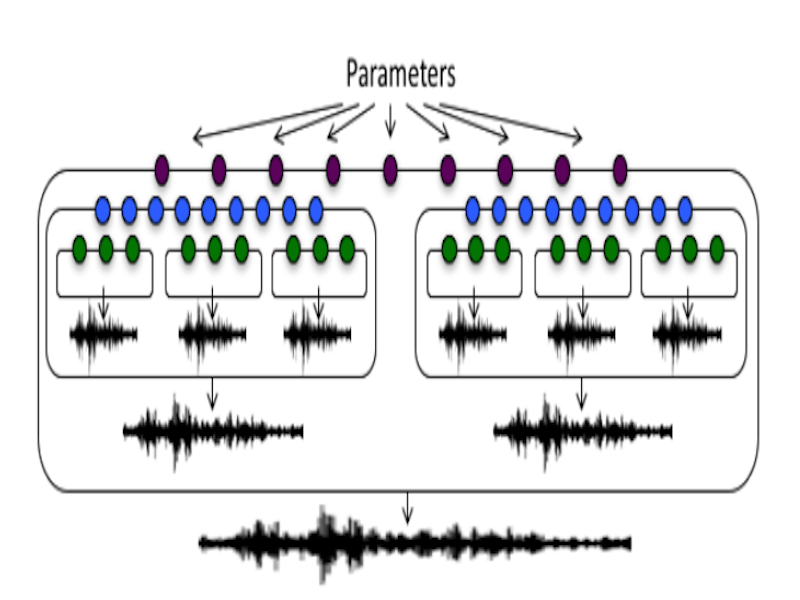

Wyse, L., and Thattai, P. "Syntex: parametric audio texture datasets for conditional training of instrumental interfaces." In New Interfaces for Musical Expression, 2022.

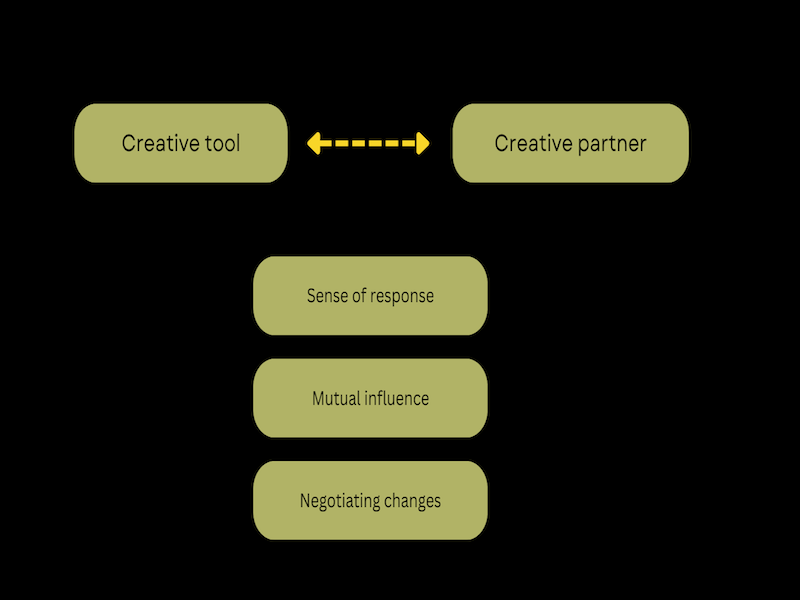

Koch, J., Ravikumar, P., & Calegario, F. "Agency in Co-Creativity: Towards a Structured Analysis of a Concept." In ICCC 2021-12th International Conference on Computational Creativity (Vol. 1, pp. 449-452). Association for Computational Creativity.

Ravikumar, P. T., and Patel, D. "Non-anthropomorphic evaluation of musical cocreative systems." In 11th International Conference on Computational Creativity, 2020.

Kantosalo, A., Ravikumar, P. T., Grace, K., & Takala, T. (2020, September). Modalities, Styles and Strategies: An Interaction Framework for Human-Computer Co-Creativity. In ICCC (pp. 57-64).

Ravikumar, P. T., and Wyse, L. "MASSE: A system for music action selection through state evaluation." In 10th International Conference on Computational Creativity, ICCC.

Ravikumar, P. T., McGee, K., and Wyse, L. "Back to the experiences: empirically grounding the development of musical co-creative partners in co-experiences." In 6th International Workshop on Musical Metacreation, 9th International Conference on Computational Creativity, ICCC (pp. 1-7).

Ravikumar, P. T. "Notational Communication with Co-creative Systems: Studying Improvements to Musical Coordination." In Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition (pp. 518-523).

Mitchell, A., Yew, J., Thattai, P., Loh, B., Ang, D., and Wyse, L. "The temporal window: explicit representation of future actions in improvisational performances." In Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition (pp. 28-38).